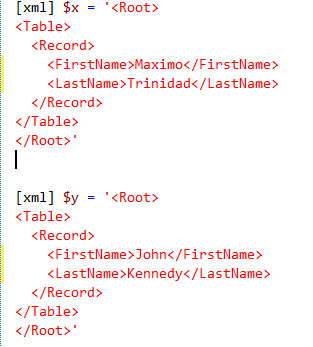

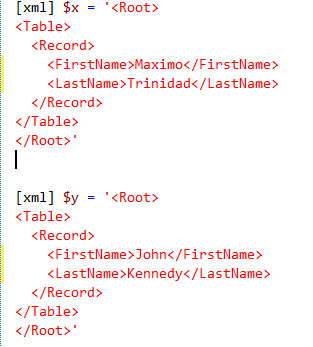

This topic came up after my SQLSaturday presentation in Tampa last weekend. In an scenario when (for some reason) someone is supplying an single XML file containing one series of information, or I should say, one record at the time. Maybe we can’t have the client to provide us with an XML file containing a series of records. This will force us to be creative and build a solution to accumulate and/or append all the individual XML files.Well, here’s how I think it can be done. I’m creating two PowerShell XML objects with one single record containing two fields: FirstName and LastName.

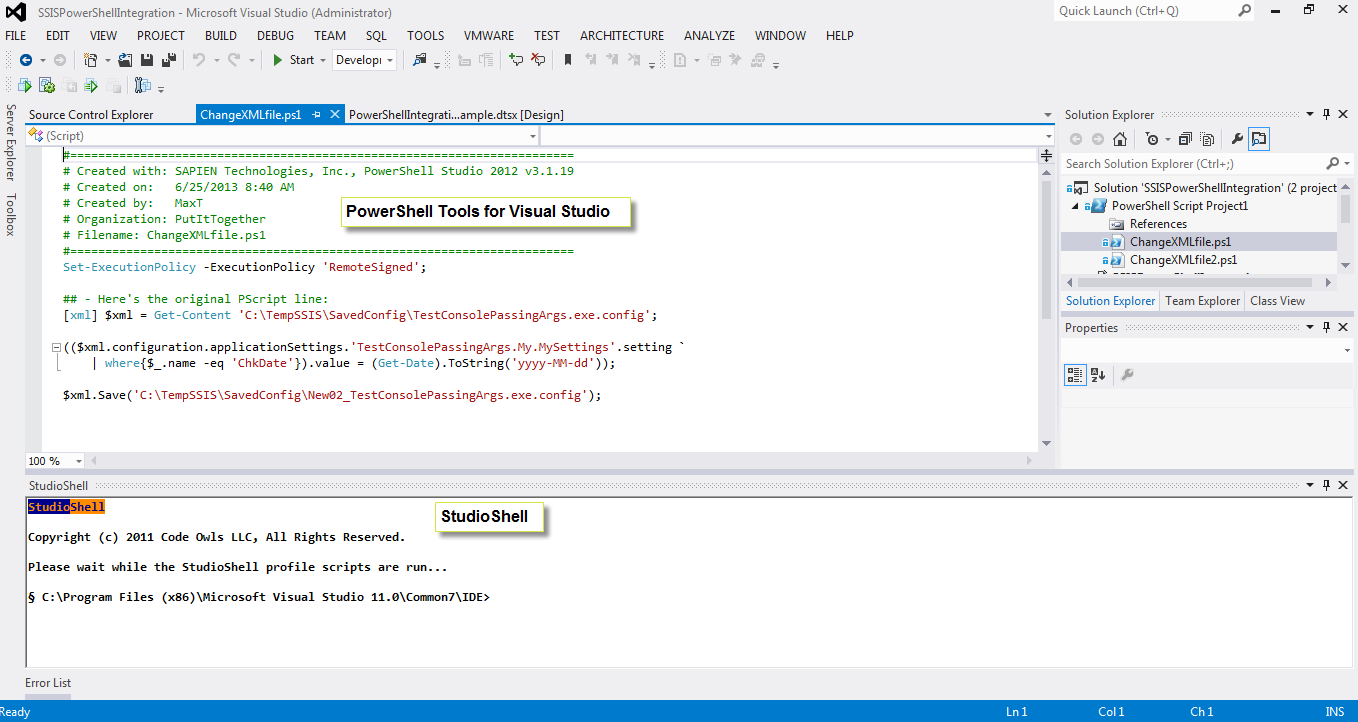

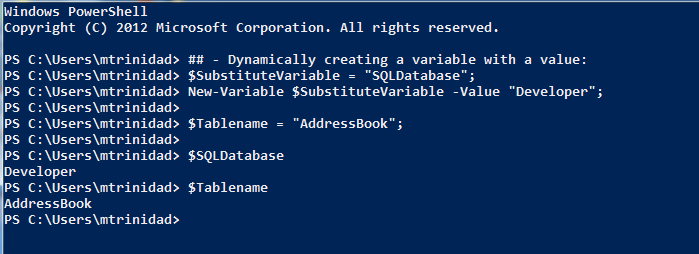

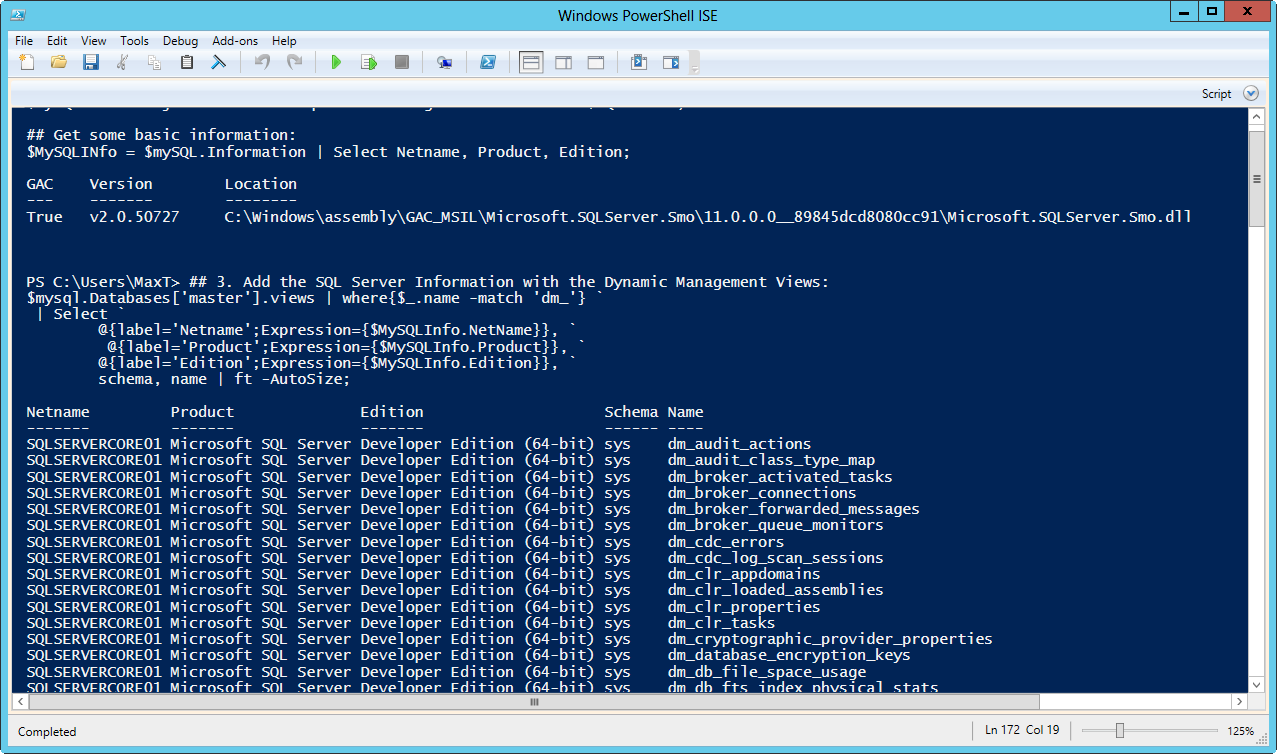

Here’s an image showing how to load an XML into a PowerShell object:

Notice I’m using the “xml” (inside square-brackets) accelerator to create the object of type ‘XML’. To verify the type of object use the following .NET object method ‘.GetType()‘.

PS C:\Users\Max> $y.gettype()

IsPublic IsSerial Name BaseType

——– ——– —- ——–

True False XmlDocument System.Xml.XmlNode

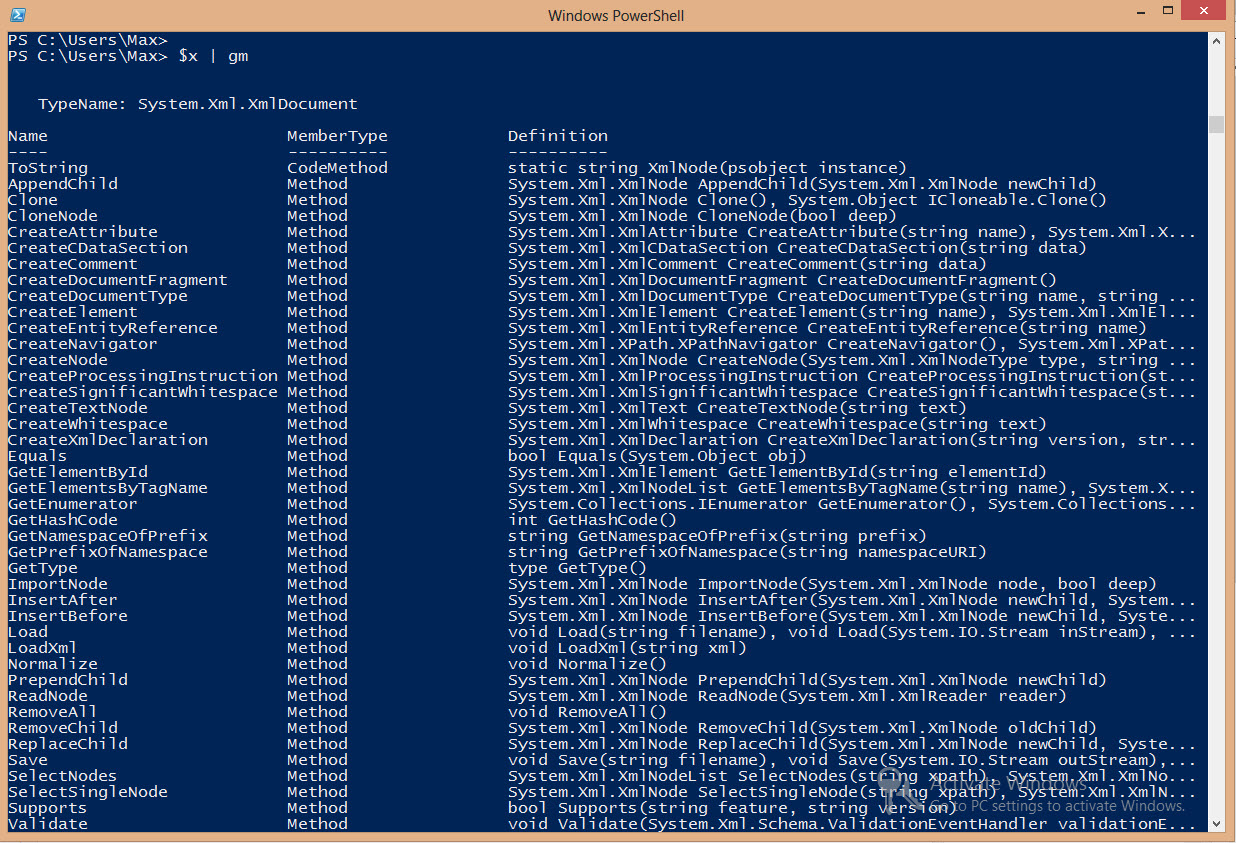

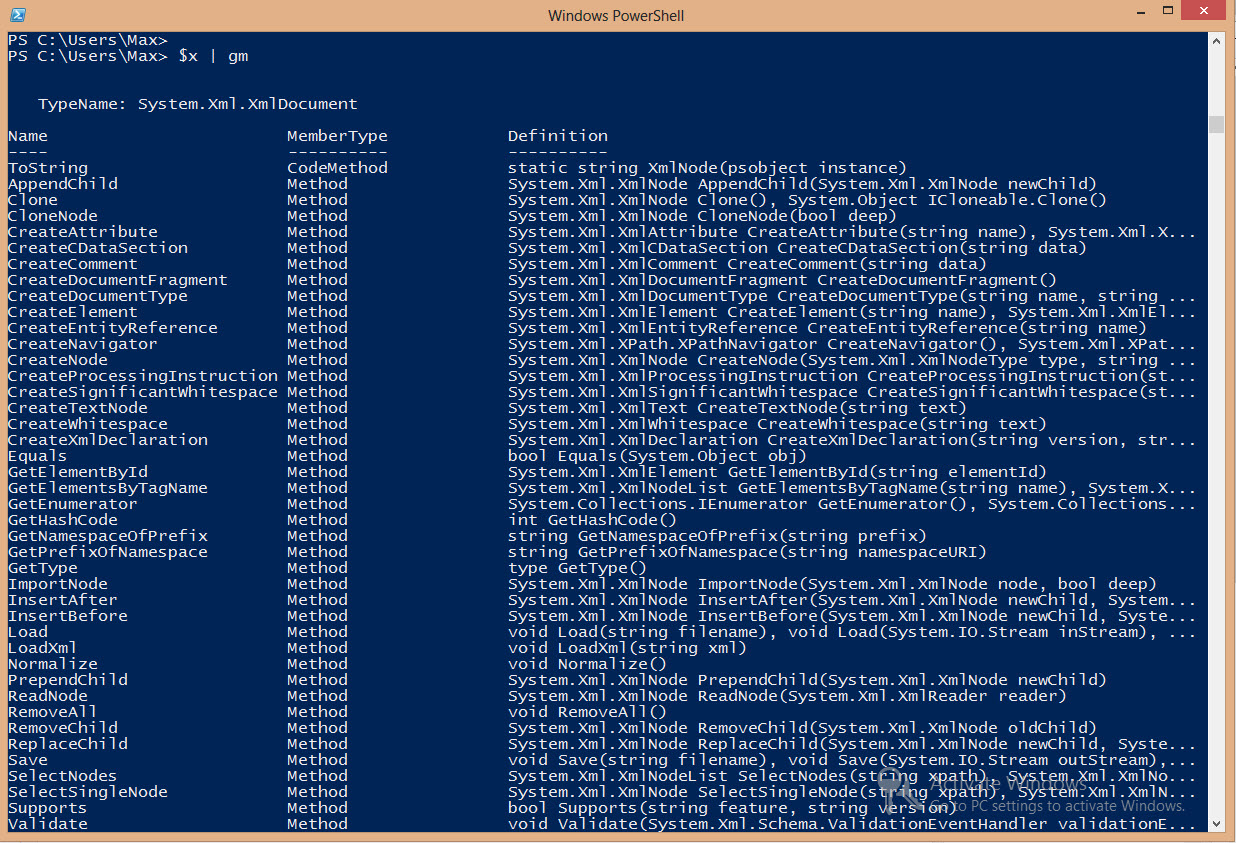

If you want to find more information about the PowerShell object you created use the ‘Get-Member‘ command to all of its Methods and Properties.

$x | Get-Member;

Now we have our two XML objects created: $x and $y. We want to add the record information from $y into $x. First, we need to make sure that the XML structure are the same in both objects:

Now we have our two XML objects created: $x and $y. We want to add the record information from $y into $x. First, we need to make sure that the XML structure are the same in both objects:

PS C:\Users\Max> $x.Root.Table.Record

FirstName LastName

——— ——–

Maximo Trinidad

PS C:\Users\Max> $y.Root.Table.Record

FirstName LastName

——— ——–

John Kennedy

As you can see both XML objects contains the “Record” elements content: FirstName and LastName.

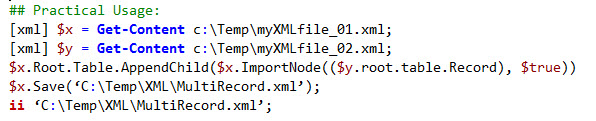

So, instead of processing these two objects separately, we are going the extract the “Record” elements from $y and inserted into $x. We can accomplish this process in two steps:

1. Creating another object that will hold the extracted elements:

$z = $x.ImportNode(($y.root.table.Record), $true);

2. Use the ‘.AppendChild()‘ method to add the extracted elements to the destination XML object (ie. $x):

$x.Root.Table.AppendChild($z);

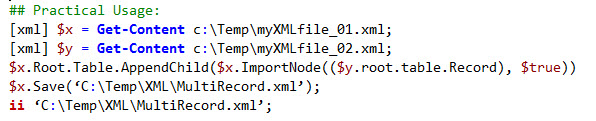

Or, you can simply create a oneliner command to do this:

$x.Root.Table.AppendChild($x.ImportNode(($y.root.table.Record), $true));

To verify that all the “Record” elements from $y has been included in $x we can use the following command:

PS C:\Users\Max> $x.Root.Table.Record

FirstName LastName

——— ——–

Maximo Trinidad

John Kennedy

As you can see, we have successfully added the “Record” elements data.

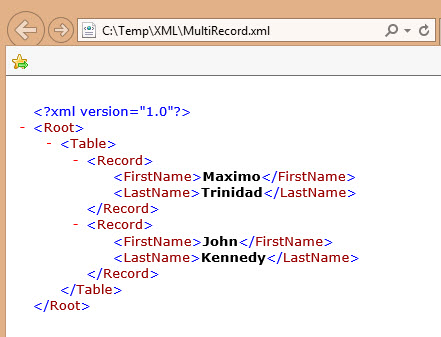

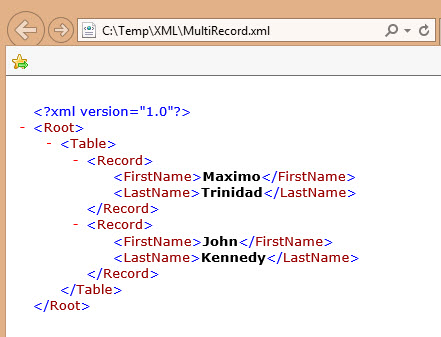

Now, all this information have been modified in memory. To save this object to a file, then we use the ‘.Save()‘ method:

$x.Save(‘C:\Temp\XML\MultiRecord.xml’);

Finally, to display the content of the file, use the ‘ii‘ which is the Alias for ‘Invoke-Item‘ command:

ii ‘C:\Temp\XML\MultiRecord.xml’;

As you can see we have added new records from one XML into another. Here’s the end result: