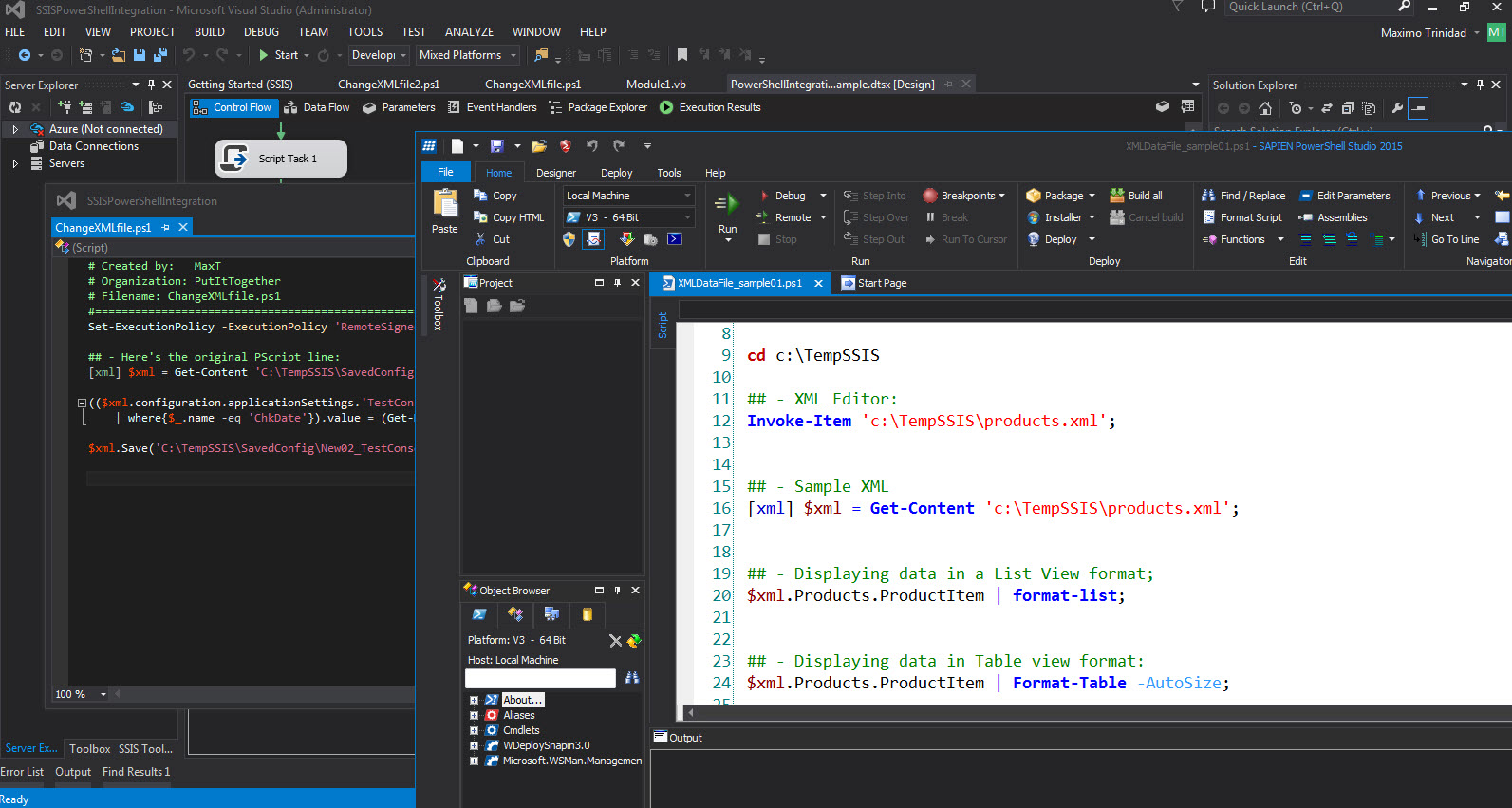

I love working with PowerShell. Yes, I admit it! I also love using SAPIEN Technologies’ “PowerShell Studio”, especially when I can develop a productive Windows application for our Data Center team to use. It makes both my job and theirs easier.

I just developed a Windows application, written in PowerShell, that handles our company’s table updates by monitoring them for failures and handling recovery. The third-party SAP vendor application our team uses is old and doesn’t offer a productive way to handle failures and recovery for these nightly tasks. And here’s where PowerShell comes to the rescue.

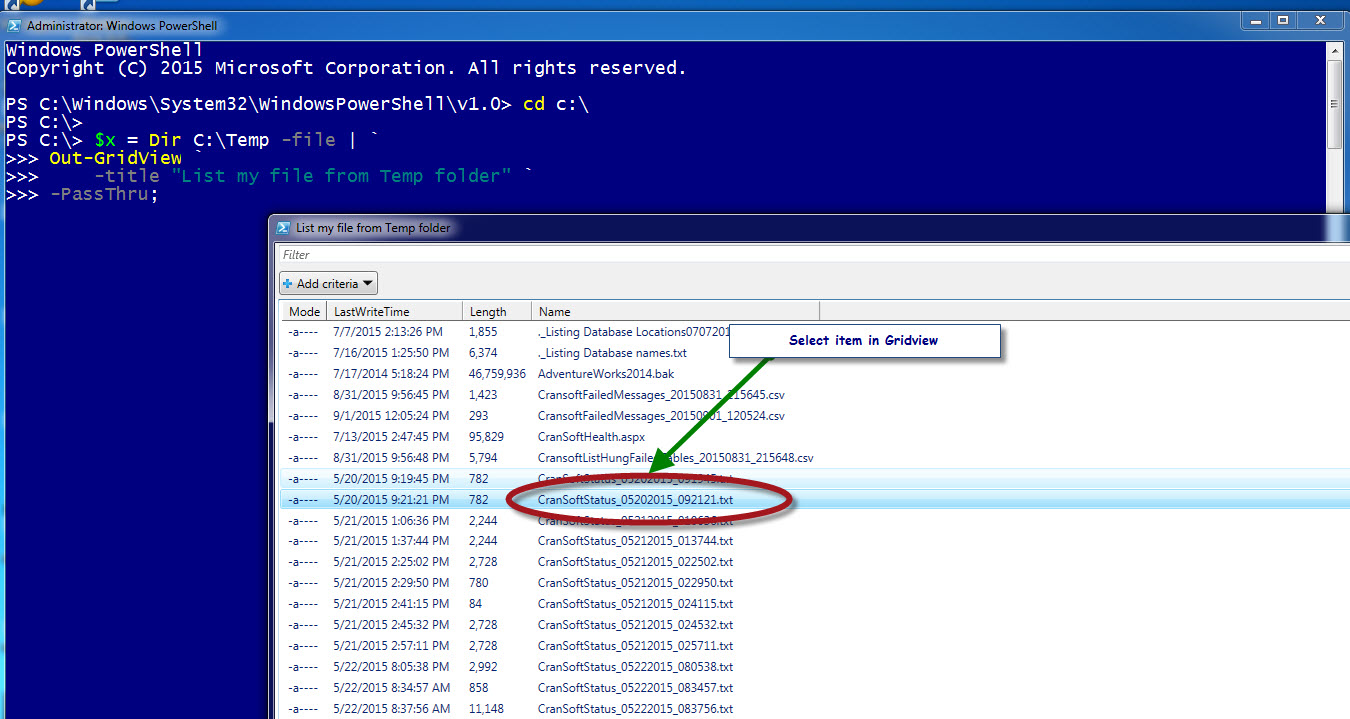

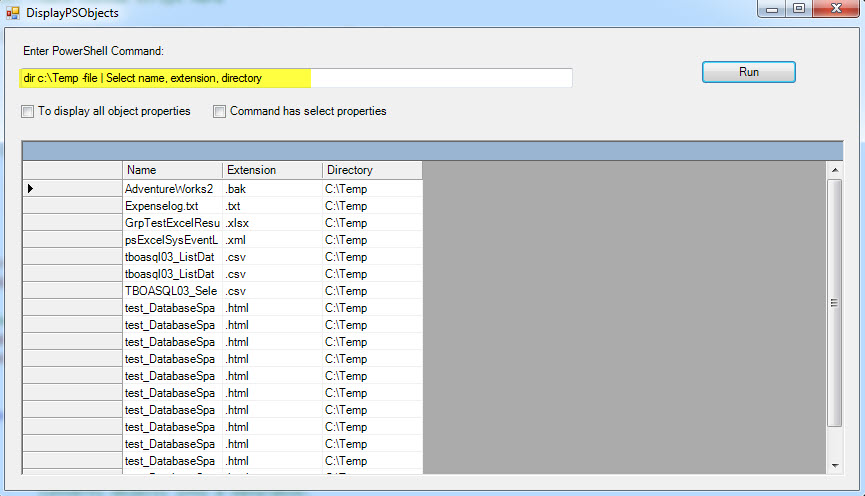

So, one of the main components in building this Windows application is the Datagrid. PowerShell has a very useful Out-GridView cmdlet, but SAPIEN’s product gives you a Visual Studio look-alike feel for developing .NET Windows applications.

Recent enhancements have made the Out-GridView cmdlet a powerful and very useful command:

1. Filtering the data.

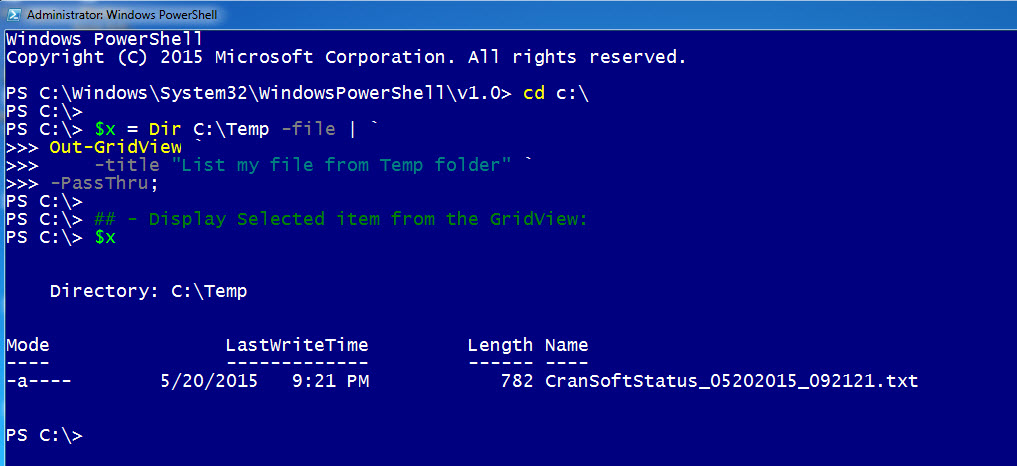

2. The “-PassThru” parameter, so you can select one or more items and send them down the pipeline.

3. The “-Title” parameter, which sets the title of the grid window.

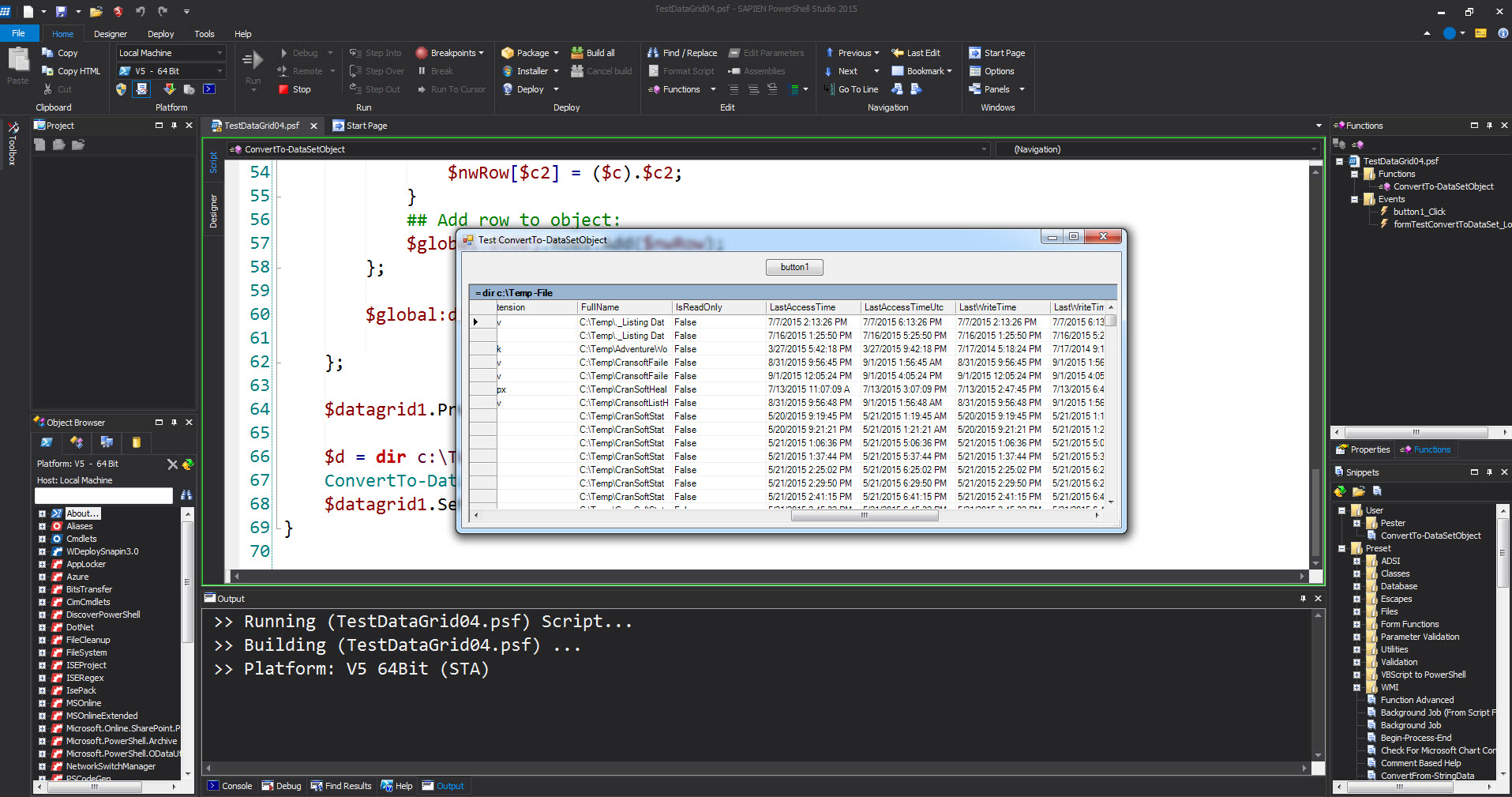

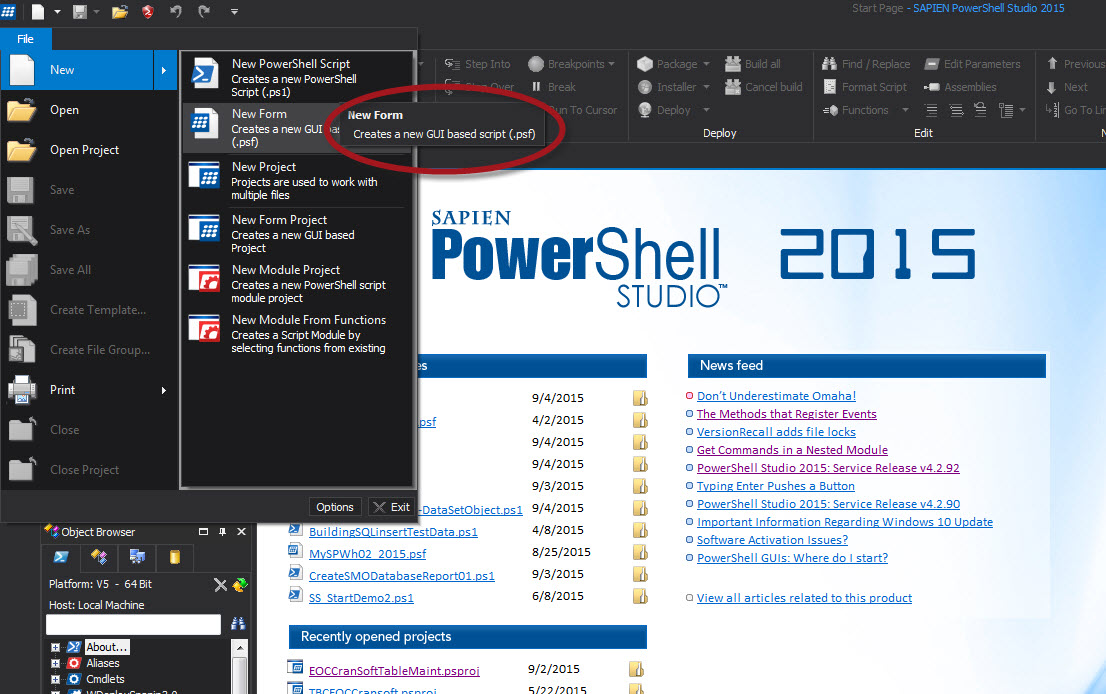

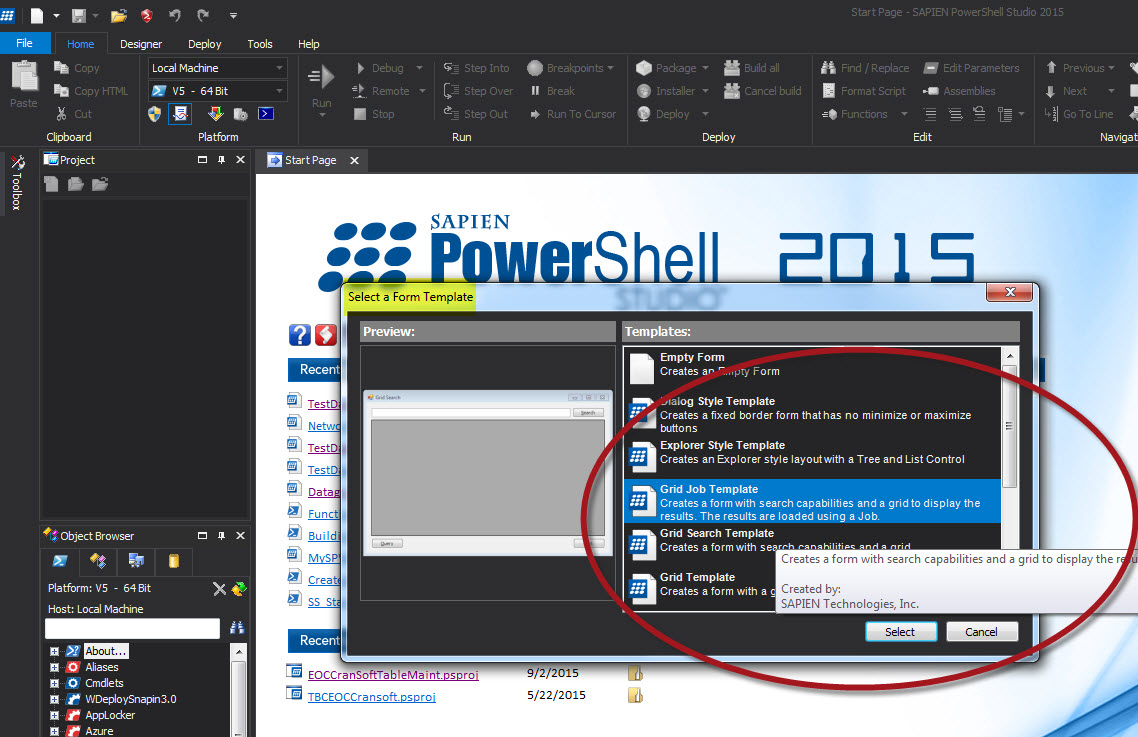
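As a small illustration of the “-Title” and “-PassThru” parameters, here is a sketch that wraps Out-GridView in a helper. The function name Select-ServiceFromGrid and the choice of Get-Service as the data source are hypothetical, and Out-GridView needs a graphical Windows session to display the grid.

```powershell
# Hypothetical helper: shows services in an Out-GridView window.
# The grid's filter box can narrow the rows; with -PassThru, the rows the user
# selects (Ctrl+click for several) are returned when they click OK.
function Select-ServiceFromGrid {
    param(
        # Text shown in the grid window's title bar
        [string]$Title = 'Select one or more services'
    )
    Get-Service |
        Select-Object -Property Name, Status, DisplayName |
        Out-GridView -Title $Title -PassThru
}
```

For example, `$picked = Select-ServiceFromGrid -Title 'Services to check'` leaves only the rows you selected in `$picked`, ready for the next command in the script.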

Now, getting back to using a Datagrid in a SAPIEN PowerShell Studio Windows application. PowerShell Studio gives us the ability to build Windows applications with PowerShell code. For someone who has never used Visual Studio, the learning curve is short, thanks to all the wizards the product includes to get you started.

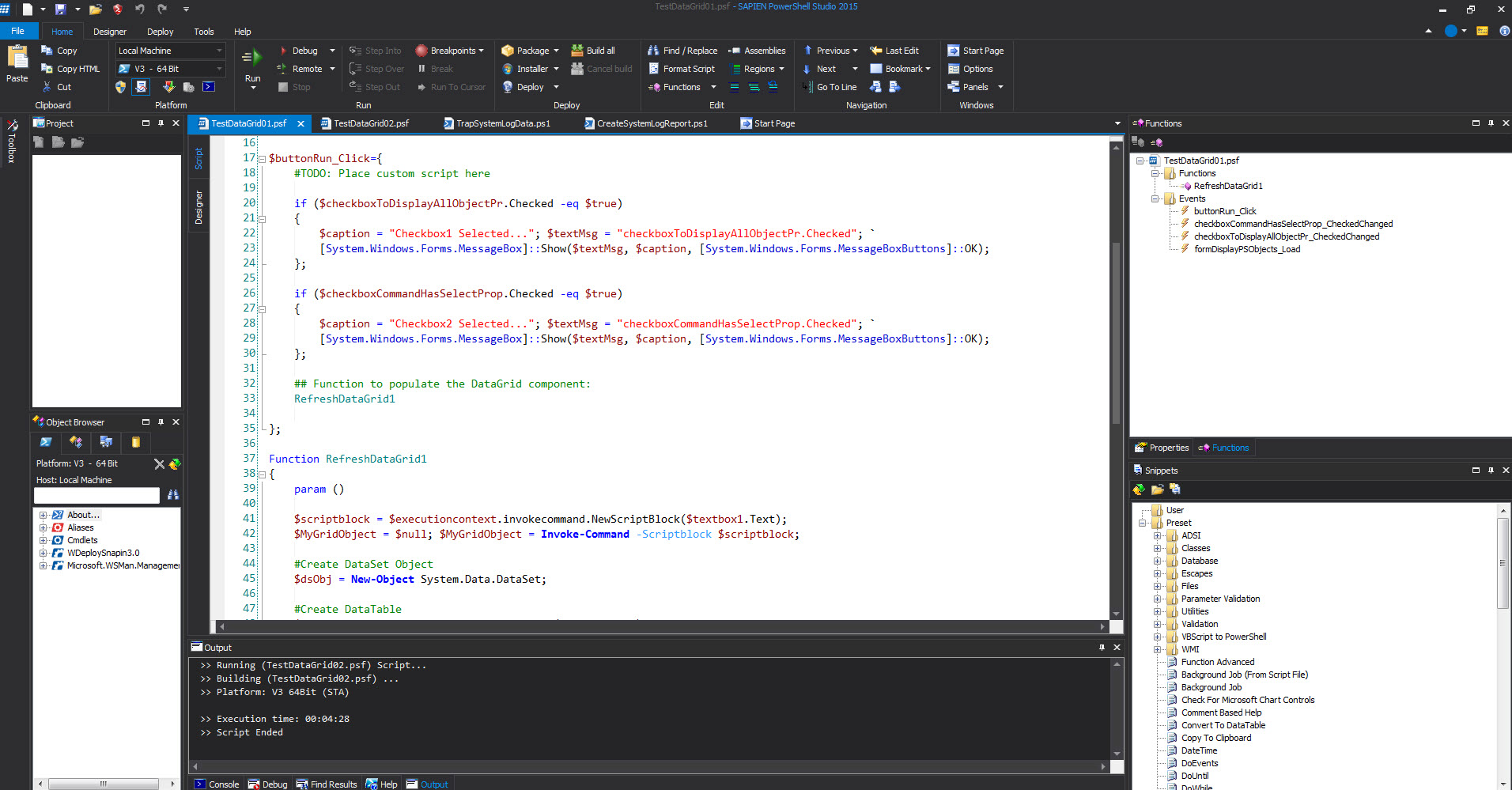

Some time ago, I posted a blog series about creating a Windows application with a datagrid. Here are the links:

1. http://www.maxtblog.com/2015/04/playing-with-powershell-studio-2015-windows-form-12/

2. http://www.maxtblog.com/2015/04/playing-with-powershell-studio-2015-windows-form-22/

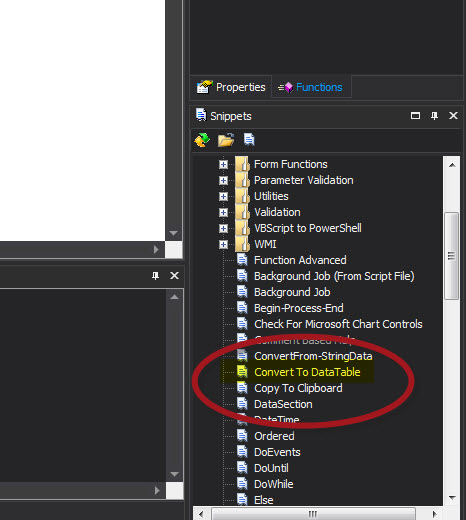

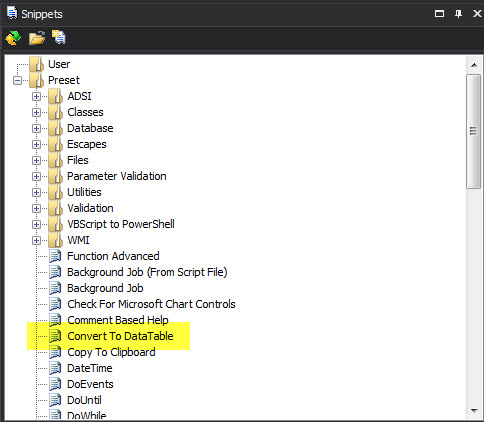

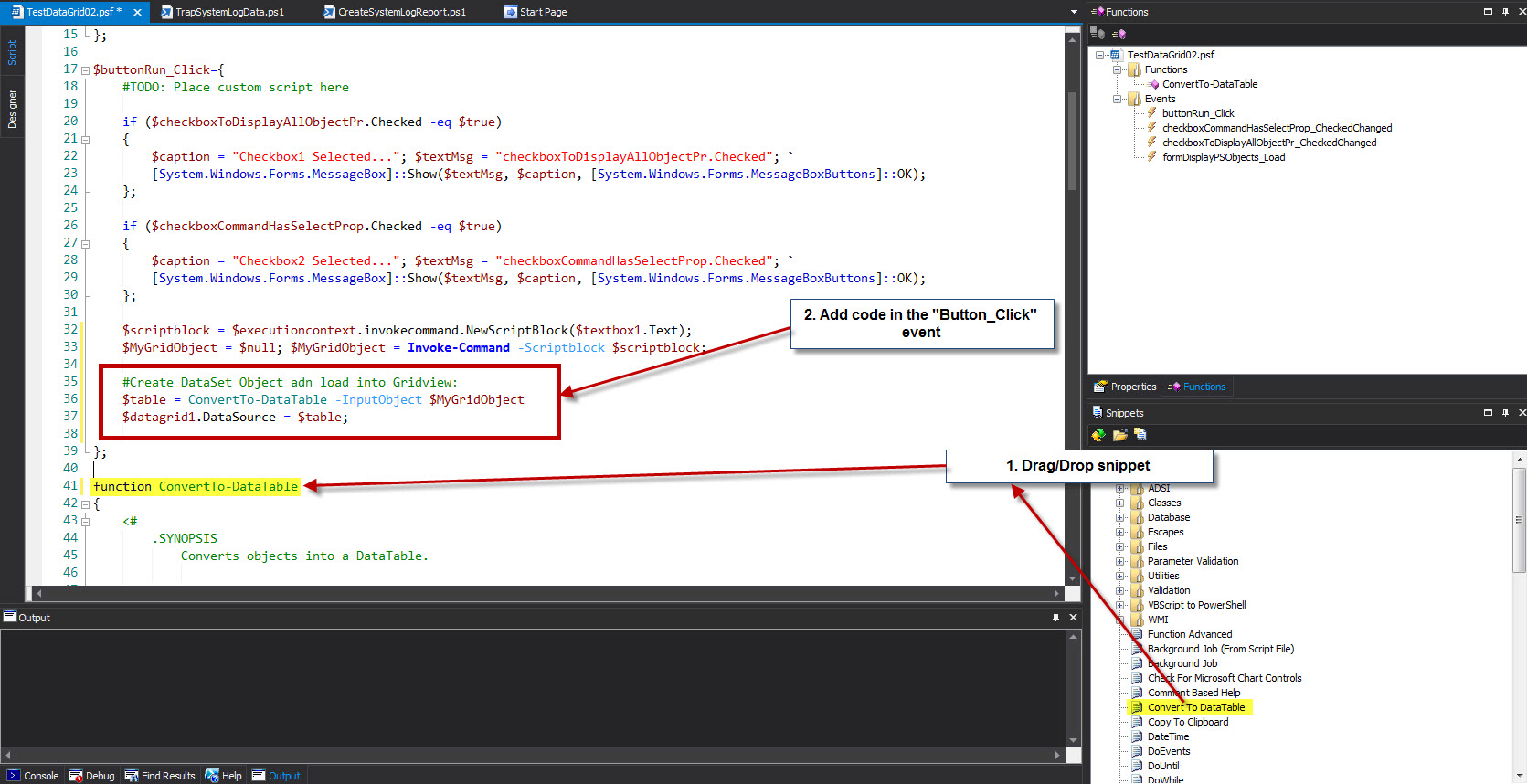

After I posted the above blog articles, my MVP friend June Blender reminded me that SAPIEN has a snippet, “ConvertTo-DataTable”, which can be used to convert a PowerShell object so it can be loaded into the Datagrid. Here’s the blog link:

http://www.maxtblog.com/2015/05/using-powershell-studio-2015-snippet-sample/

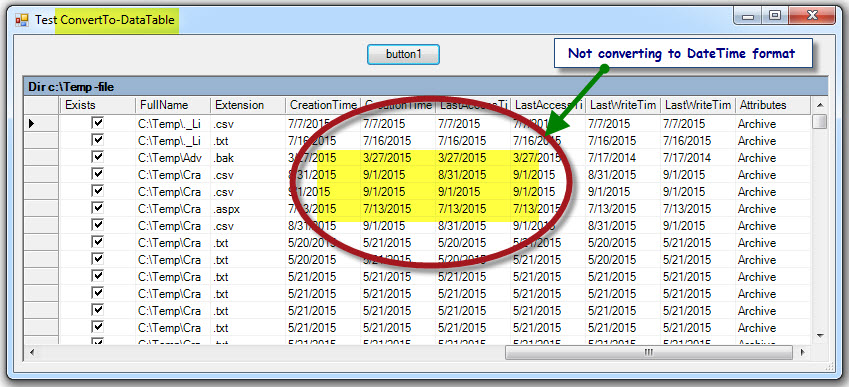

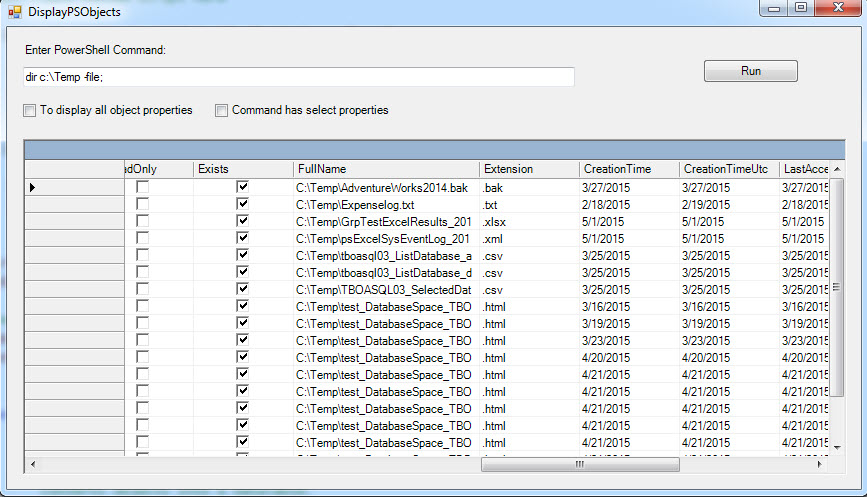

But just recently, while building more applications with a Datagrid, I discovered that the DateTime values displayed in the datagrid were incomplete: they only showed the date.

So, I went back to my own version that builds the object for the datagrid and verified that the DateTime values are retained.

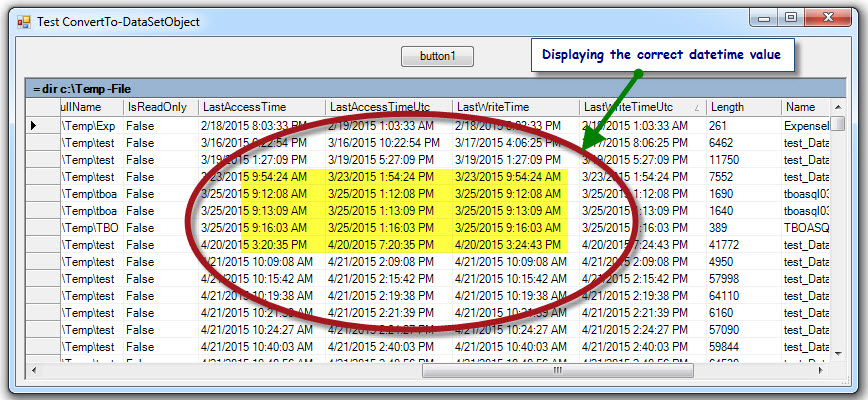
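To check what an untyped DataTable column actually keeps, the sketch below compares a column added without a data type (which defaults to System.String) with one typed as System.DateTime. It only exercises System.Data, not the Datagrid control itself, so it shows what is stored rather than how the grid renders it; the table and column names are made up for the demo.

```powershell
# Compare an untyped column (defaults to System.String) with a DateTime-typed column.
$table = New-Object System.Data.DataTable('Demo')
[void]$table.Columns.Add('AsText')                      # no type given: System.String
[void]$table.Columns.Add('AsDate', [System.DateTime])   # typed column

# Build the value with the DateTime constructor so it is a plain .NET DateTime
$when = [System.DateTime]::new(2015, 6, 1, 23, 45, 0)

$row = $table.NewRow()
$row['AsText'] = $when    # stored as text, in the current culture's general format
$row['AsDate'] = $when    # stored as a DateTime value
$table.Rows.Add($row)

# Inspect what each column holds
$table.Columns['AsText'].DataType.FullName
$table.Rows[0]['AsText']
$table.Rows[0]['AsDate'].GetType().FullName
```

The text column keeps the time portion as part of the string, while the typed column keeps the original DateTime value for the grid to format.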

Then, I decided to create a function, “ConvertTo-DataSetObject”, a smaller version that seems to work with DateTime values loaded into a Datagrid.

I then added the function to my User snippets section. Here’s the “ConvertTo-DataSetObject” code snippet:

[sourcecode language="powershell"]
function ConvertTo-DataSetObject
{
<#
.SYNOPSIS
Convert an object to a DataSet object for a datagrid.

.DESCRIPTION
Convert an object to a DataSet object for a datagrid by creating the global
variables "$global:dsObj" (the DataSet) and "$global:dtObj" (its "PSObjTable" DataTable).

.PARAMETER InputObject
This parameter will accept any PowerShell variable (a single object or a collection).

.EXAMPLE
PS C:\> ConvertTo-DataSetObject -InputObject $psObject

.NOTES
This function is useful when creating an object for a datagrid component.
Columns are created without a data type, so they default to System.String and
each value is stored as text (a DateTime keeps its time portion).
#>
    Param (
        [object]$InputObject
    )

    # Create the DataSet object
    $global:dsObj = New-Object System.Data.DataSet

    # Create the DataTable
    $global:dtObj = New-Object System.Data.DataTable("PSObjTable")

    # Get the property names once; Get-Member lists each member once for the whole collection
    $propNames = ($InputObject | Get-Member -MemberType '*Property').Name

    ## Populate columns
    foreach ($name in $propNames)
    {
        [void]$global:dtObj.Columns.Add($name)
    }

    ## Populate rows
    foreach ($item in $InputObject)
    {
        # Initialize a new row
        $nwRow = $global:dtObj.NewRow()

        # Copy each property value; a DataRow rejects a .NET null, so use DBNull instead
        foreach ($name in $propNames)
        {
            $value = $item.$name
            if ($null -eq $value) { $value = [System.DBNull]::Value }
            $nwRow[$name] = $value
        }

        # Add the row to the table
        $global:dtObj.Rows.Add($nwRow)
    }

    $global:dsObj.Tables.Add($global:dtObj)
}
[/sourcecode]
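If you would rather not depend on global variables, a variation could build the same kind of DataSet and return it to the caller. This is a sketch under my own assumptions, not SAPIEN’s snippet or a replacement for the function above: the name ConvertTo-DataSet, the -TableName parameter, and the pipeline input handling are hypothetical choices.

```powershell
# Hypothetical variation: returns the DataSet instead of setting $global:dsObj.
function ConvertTo-DataSet {
    [CmdletBinding()]
    [OutputType([System.Data.DataSet])]
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [object[]]$InputObject,

        # Name of the table the grid binds to ("PSObjTable" matches the function above)
        [string]$TableName = 'PSObjTable'
    )
    begin {
        # Collect pipeline input so the columns can be read from every object
        $items = [System.Collections.Generic.List[object]]::new()
    }
    process {
        foreach ($obj in $InputObject) { $items.Add($obj) }
    }
    end {
        $dataSet = New-Object System.Data.DataSet
        $table   = New-Object System.Data.DataTable($TableName)

        # Property names across all objects; columns stay untyped (System.String)
        $names = ($items | Get-Member -MemberType '*Property').Name
        foreach ($name in $names) { [void]$table.Columns.Add($name) }

        foreach ($item in $items) {
            $row = $table.NewRow()
            foreach ($name in $names) {
                $value = $item.$name
                # A DataRow rejects a .NET null; store DBNull instead
                if ($null -eq $value) { $value = [System.DBNull]::Value }
                $row[$name] = $value
            }
            $table.Rows.Add($row)
        }

        $dataSet.Tables.Add($table)
        $dataSet
    }
}
```

Usage would then be `$ds = $d | ConvertTo-DataSet` followed by `$datagrid1.SetDataBinding($ds, "PSObjTable")`, which keeps the data scoped to the form code that uses it.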

Here are some additional tips for working with the datagrid component:

In the Datagrid properties:

1. Add descriptive text under CaptionText.

2. Set ReadOnly to True.

Use code to set the column width before displaying the datagrid:

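[sourcecode language="powershell"]
# Set the default width (in pixels) for the grid's columns before binding the data
$datagrid1.PreferredColumnWidth = 120

# ...

# Build $global:dsObj from the objects in $d, then bind the grid to its "PSObjTable" table
ConvertTo-DataSetObject -InputObject $d
$datagrid1.SetDataBinding($global:dsObj, "PSObjTable")
[/sourcecode]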
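For readers who want to try these tips outside PowerShell Studio, here is a hand-written sketch of a form that hosts the legacy System.Windows.Forms.DataGrid control and applies the CaptionText, ReadOnly, and PreferredColumnWidth settings in code. The DataGrid control ships with the .NET Framework, so this assumes Windows PowerShell 5.1; the function name Show-DataSetGrid, its parameters, and the form size are hypothetical.

```powershell
# Hypothetical helper: shows one table of a DataSet in a legacy WinForms DataGrid.
# Assumes Windows PowerShell 5.1 (.NET Framework) on a Windows desktop.
function Show-DataSetGrid {
    param(
        [Parameter(Mandatory)]
        [System.Data.DataSet]$DataSet,
        [string]$TableName = 'PSObjTable',
        [string]$Caption = 'Nightly table updates'
    )
    Add-Type -AssemblyName System.Windows.Forms

    $form = New-Object System.Windows.Forms.Form
    $form.Text = $Caption
    $form.Width = 800
    $form.Height = 400

    $grid = New-Object System.Windows.Forms.DataGrid
    $grid.Dock = 'Fill'                  # grid fills the whole form
    $grid.CaptionText = $Caption         # tip 1: descriptive caption
    $grid.ReadOnly = $true               # tip 2: users cannot edit the rows
    $grid.PreferredColumnWidth = 120     # default column width in pixels, set before binding
    $grid.SetDataBinding($DataSet, $TableName)

    $form.Controls.Add($grid)
    [void]$form.ShowDialog()             # blocks until the form is closed
    $form.Dispose()
}
```

For example, after `ConvertTo-DataSetObject -InputObject $d`, running `Show-DataSetGrid -DataSet $global:dsObj` would open the data in a captioned, read-only grid.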