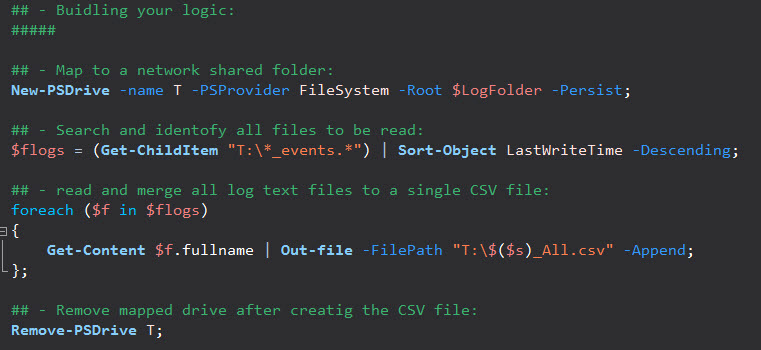

Series 2 – Building your data object

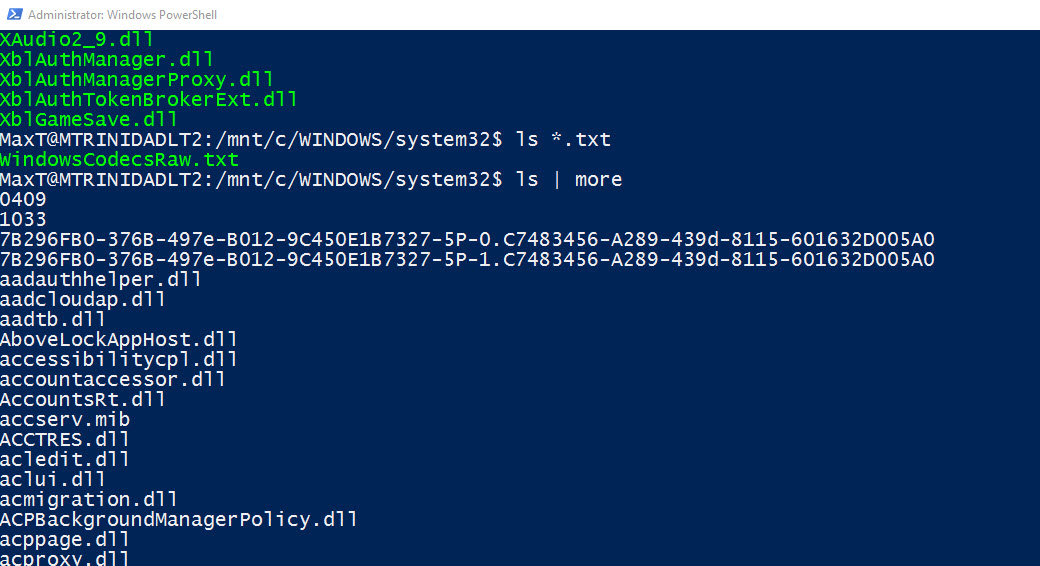

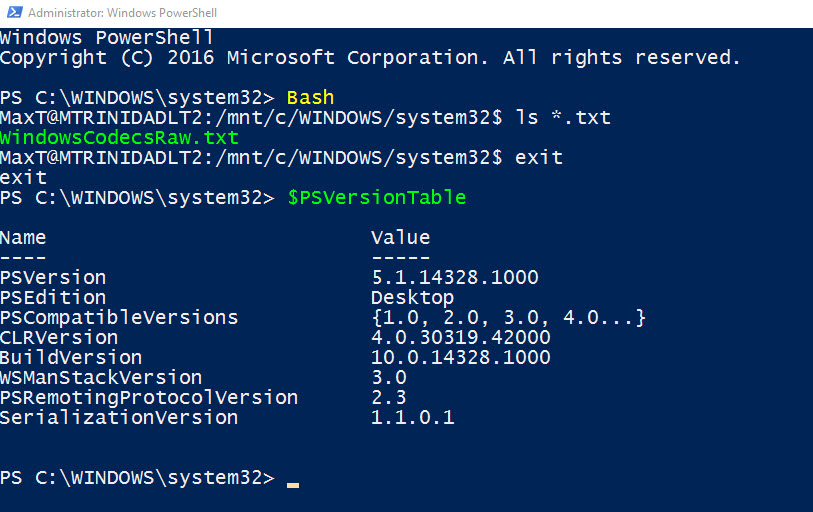

In the first part of the series we mapped to a server folder, and merge all needed log text files into a CSV type. Now, we need to analize the data we have collected so we can determine the correct course of action. This way we can work in creating our script solution to load this information in a SQL Server table.

Before analyzing our data we are going to create our data by creating a PowerShell object using the “Import-Csv” cmdlet. Keep in mind, if your CSV file is on a network drive then you’ll need to point to the folder. For most cases you’ll start creating CSV file on your local drive.

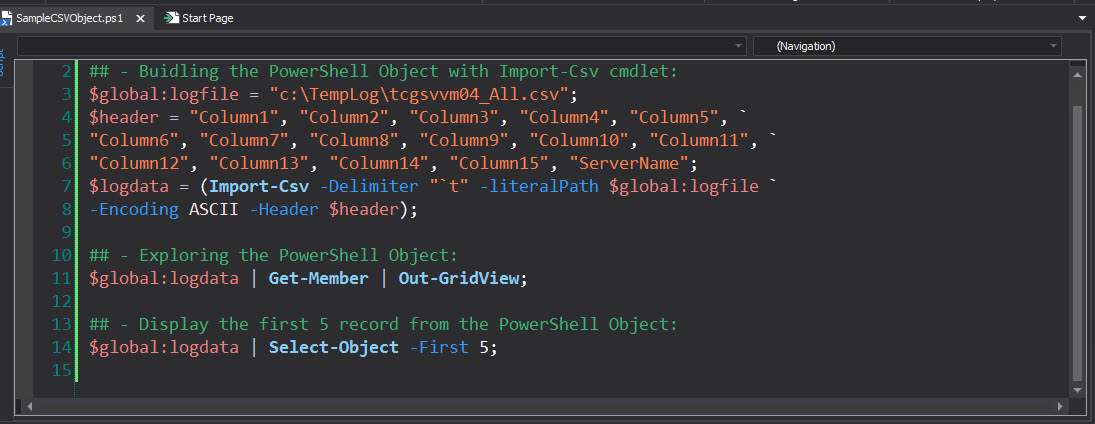

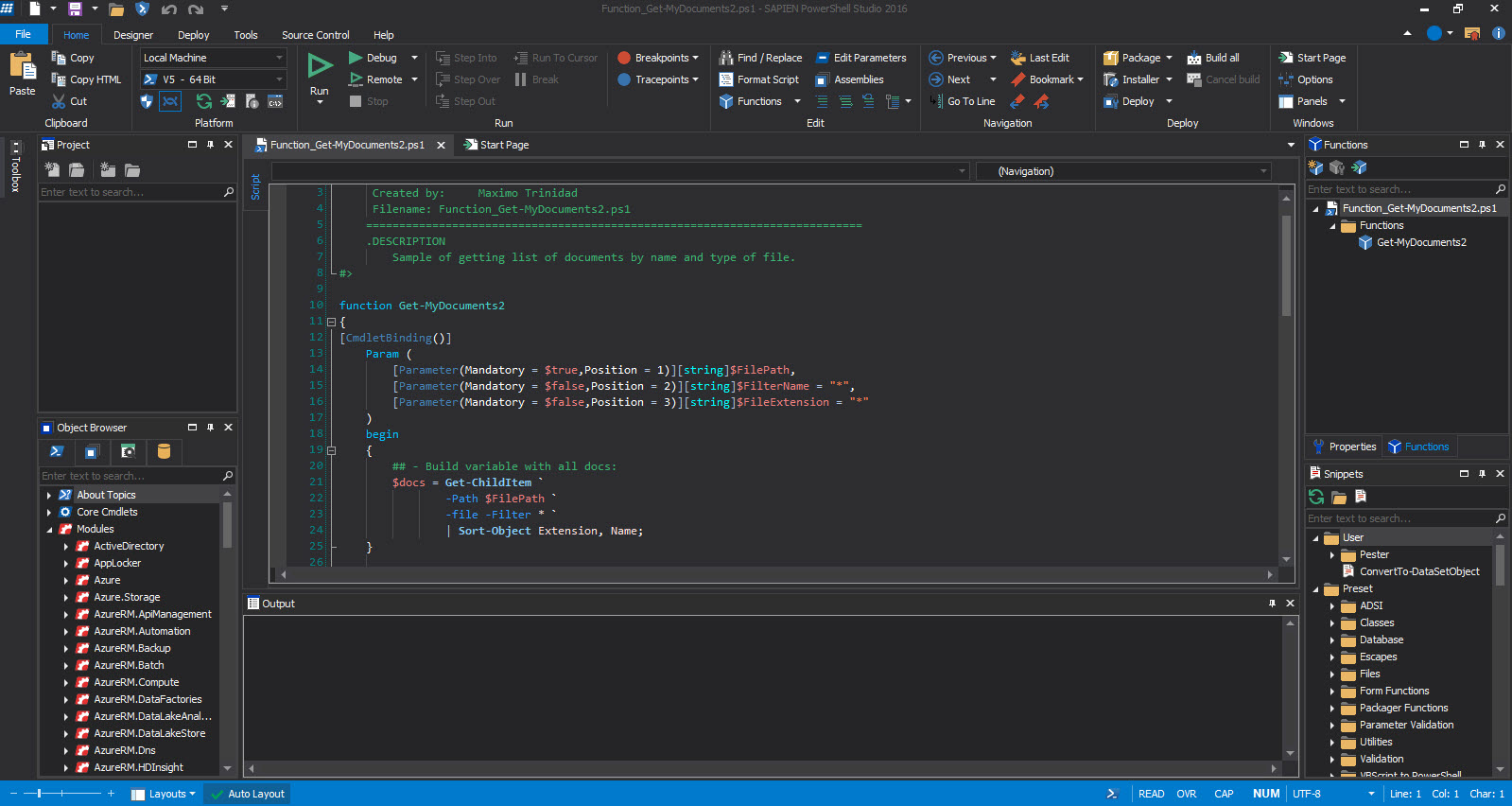

Creating your CSV data object is easy. Follow the sample:

[sourcecode language=”powershell”]

$logdata = `

(Import-Csv -Delimiter "`t" -literalPath $logfile -Encoding ASCII -Header $header) `

| Where-object{ $_.Column1 -gt "$($sqlresult.Tables.LastWriteTime)" };

[/sourcecode]

The above sample gives you a look on what’s possible to do with such a command line in the script. Basically, at the same time while importing the data from a CSV file, I’m also querying it to look at a particular column containing a date field. The other global variable comes a result set from another query done against a SQL table. The end result is to only create the $logdata with the dates we want to load into a SQL table.

PowerShell Object Column headers

If you notice, in the above cmdlet the where-clause I’m selecting to use the Column1 property instead of a reasonable label. In my scenario the data in the CSV file contain variable columns fopr its different data types such as: Info, Error, and System. So, it was easy to identify the total number of columns to be 15 columns.

Now, using the cmdlet “Import-Csv” using the parameter “-Header”, you can define a list columns when you build the $Logdata object. We create the $header variable with the column-names separated by comma.

[sourcecode language=”powershell”]

$header= "Column1", "Column2", "Column3", …"Column15";

[/sourcecode]

Then, we can incorporate this $header variable in the *”Import-Csv” cmdlet in the following way:

*Note: Keep in mind, you must define the delimiter use in the CSV file.

[sourcecode language=”powershell”]

$logdata = (Import-Csv -Delimiter "`t" -literalPath $logfile -Encoding ASCII -Header $header)

[/sourcecode]

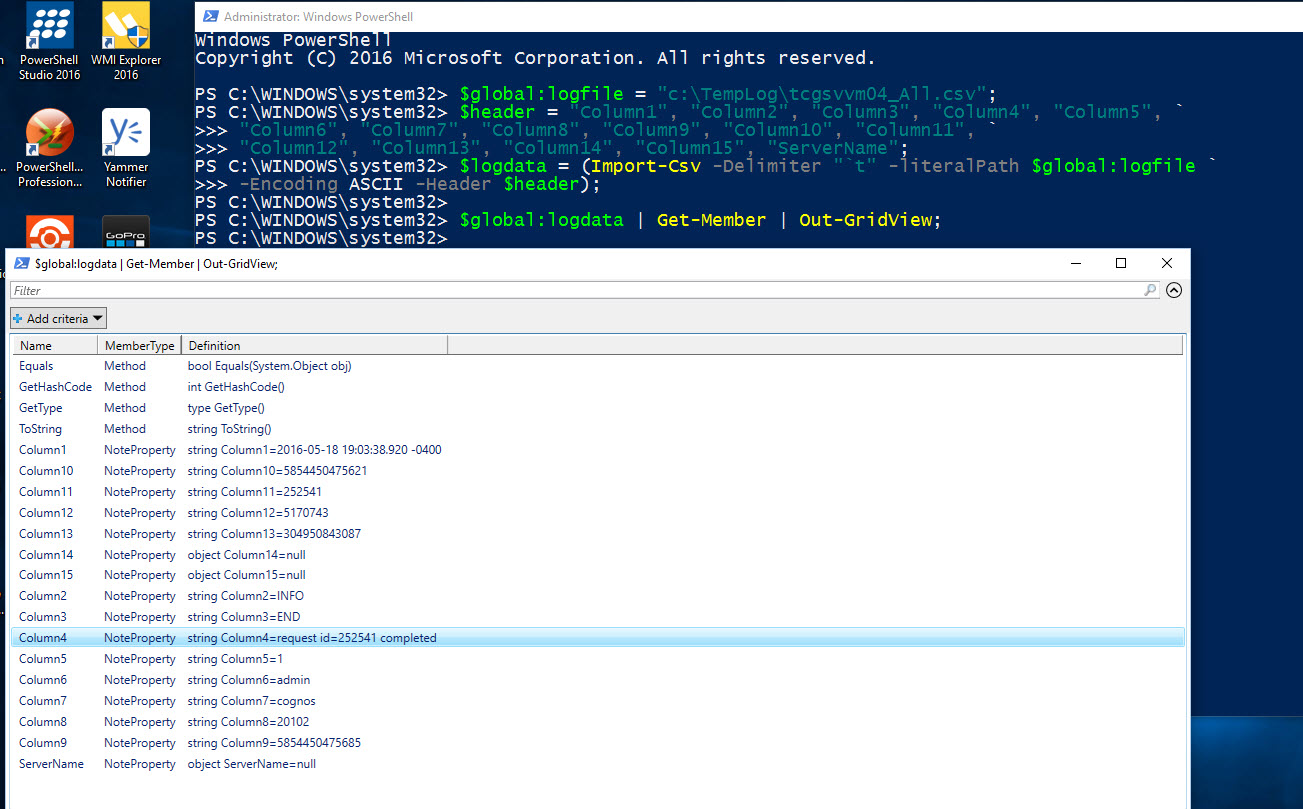

Next step after building the $logdata object is do the analysis on what you’re going to import into your SQL Server table. To start exploring any PowerShell object use the Get-Member cmdlet in combination with the Out-Gridview cmdlet. This combination will be use all the time while you develop and test you scripts.

[sourcecode language=”powershell”]

$logdata | Get-Member | Out-Gridview;

[/sourcecode]

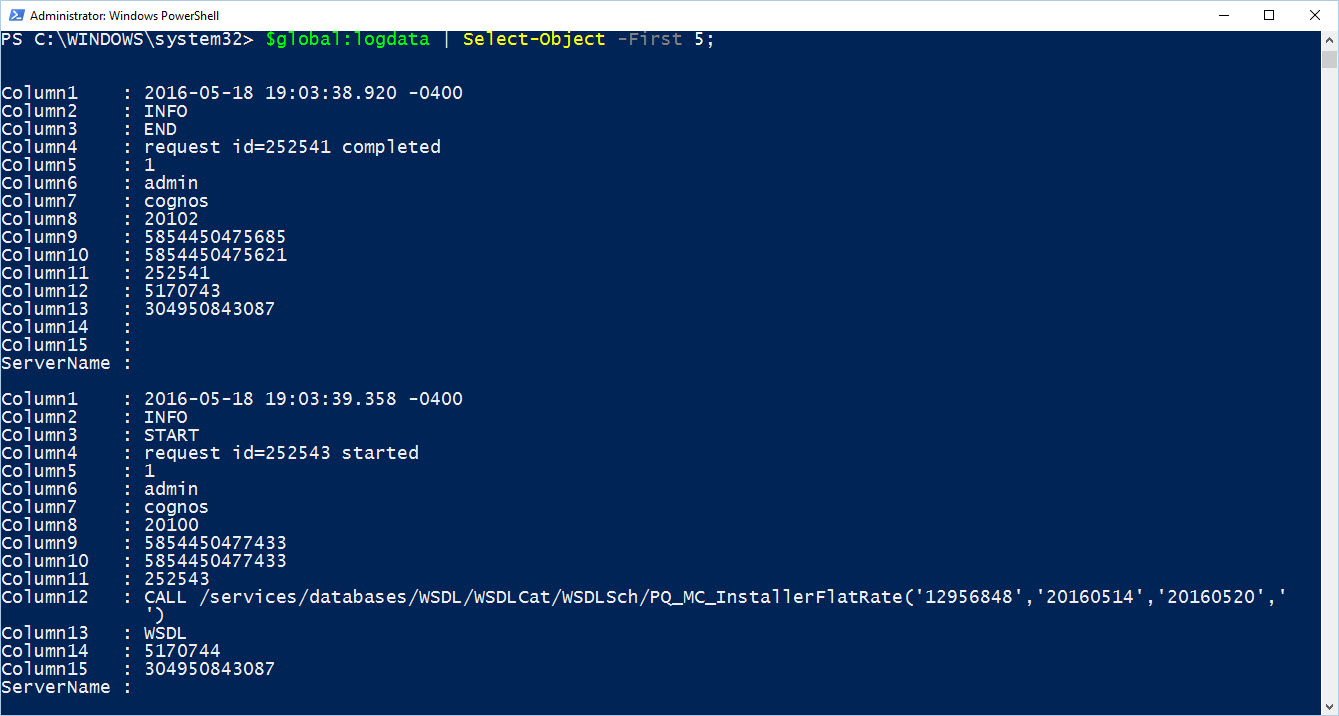

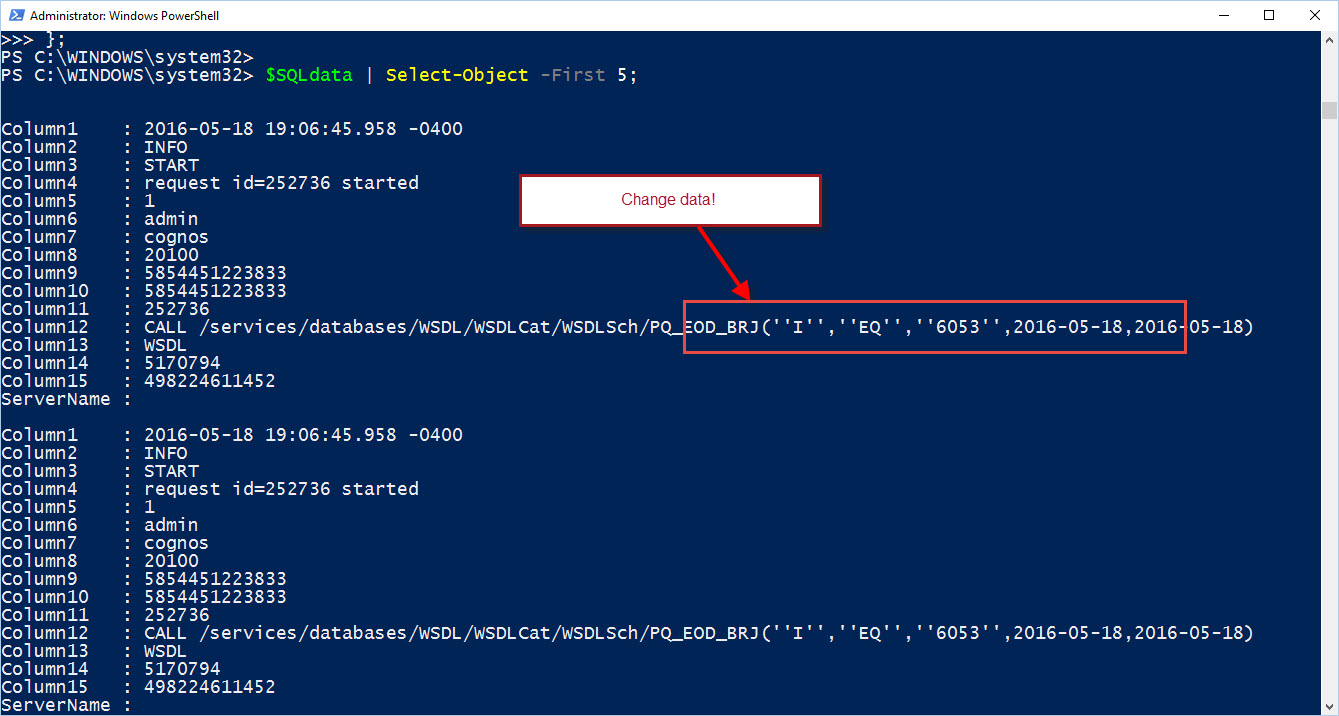

Displaying the $logdata object but only the first five data object:

[sourcecode language=”powershell”]

$logdata | | Select-Object -First 5;

[/sourcecode]

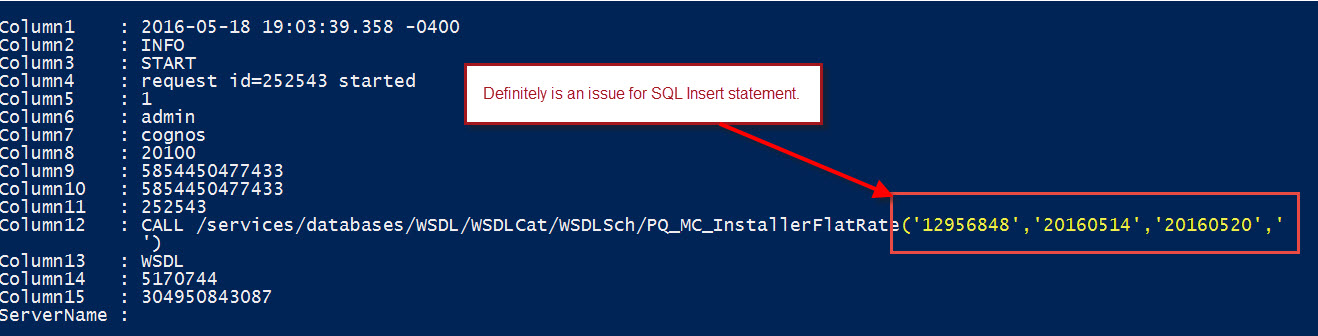

This is the part where you’re going to spend some time. In figuring out what possible issues you’ll find to import this data into your SQL table. Things like having both a single and double quotes will break your SQL Insert statements. So, look carefully and take the right action to transform this piece of the data.

The following sample code snippet have help in converting to a proper format before using the SQL Insert statement.

[sourcecode language=”powershell”]

## – Step to change data that has Single or Double quotes:

$SQLdata = $null;

$SQLdata = foreach ($l in $global:logdata)

{

## – Sample snippet for replacing Single or Double-quotes before SQL Inserts:

if ($l.Column12 -ne ‘Admin’)

{

$l.Column4 = $l.Column4.replace("”", "””").replace("’ ", ‘" ‘).Replace(" ‘", ‘ "’).Replace("’", ‘"’);

if (($l.Column3 -eq ‘START’) -or ($l.Column3 -eq ‘END’))

{

if ($l.Column12 -ne $null)

{

$l.Column12 = $l.Column12.replace("’", "”");

}

else

{

if (($l.Column12 -ne $null) -or ($l.Column12 -ne ‘Admin’))

{

if ($l.Column3 -eq ‘FAILED’ )

{

$l.Column12 = $l.Column12.replace("’ ", ‘" ‘).Replace(" ‘", ‘ "’);

}

else

{

$l.Column12 = $l.Column12.replace("”", "””");

}

};

};

};

## – To output with reformated data:

$l;

}

};

[/sourcecode]

Here’s the changed data object information in Column12 only if Column3 has a “Failed” value. I will cover more in the next blog I’ll where I show how to construct the SQL Insert statement.

What’s Next?

Next blog I’ll be covering importing the $logdata to a SQL Server table reaching its final destination.